How LLMs Actually Work

Understanding the core capabilities and limitations of Large Language Models

The Truth About LLM Capabilities

Large Language Models (LLMs) like ChatGPT, Claude, and Gemini are often perceived as intelligent systems that can calculate, reason, and access real-time information. The reality is quite different. On their own, these models neither perform calculations nor access external data; they are fundamentally prediction engines.

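The core functionality of an LLM is to predict the next token (a word or part of a word). Given the text so far, the model assigns a probability to each possible next token based on patterns learned from its training data, and a likely token is chosen. Repeating this step produces the full response. This predictive capability, while impressive, is fundamentally different from actual computation or reasoning.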

In this guide, we'll explore the true nature of LLMs, their limitations, and how they use external tools to overcome their inherent constraints.

Prediction, Not Calculation

When you ask an LLM a simple arithmetic question like "What is 2 + 2?" and it correctly responds with "4," it might seem like the model is performing a calculation. What is actually happening, however, is pattern recognition and prediction.

User

What is 2 + 2?

LLM

2 + 2 = 4

The model has seen countless examples of "2 + 2 = 4" in its training data, so it predicts that after "What is 2 + 2?" the most likely next tokens would form the answer "4". This is fundamentally different from performing the mathematical operation of addition.

Think of it like this: the model is more like an extremely sophisticated autocomplete system that has seen a vast amount of text and can predict what usually comes after a given prompt, rather than a calculator that computes results.
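To make the autocomplete analogy concrete, here is a deliberately tiny TypeScript sketch. It is not how a real LLM works internally (real models use neural networks over learned token representations, not lookup tables); it only illustrates the principle of predicting a continuation from counted patterns. The corpus and the `train`/`predictNext` names are made up for illustration.

```typescript
// Toy "autocomplete": record which token followed each prefix in a tiny corpus,
// then predict the most frequent continuation. No arithmetic happens anywhere.
type Counts = Map<string, Map<string, number>>;

// Hypothetical toy corpus; real models learn from vastly more text.
const corpus: string[] = [
  "2 + 2 = 4",
  "2 + 2 = 4",
  "2 + 2 = 4",
  "2 + 2 = 5", // occasional noise in the data
  "3 + 3 = 6",
];

// Count every (prefix -> next token) pair, splitting on spaces as a stand-in tokenizer.
function train(lines: string[]): Counts {
  const counts: Counts = new Map();
  for (const line of lines) {
    const tokens = line.split(" ");
    for (let i = 1; i < tokens.length; i++) {
      const context = tokens.slice(0, i).join(" ");
      const seen = counts.get(context) ?? new Map<string, number>();
      seen.set(tokens[i], (seen.get(tokens[i]) ?? 0) + 1);
      counts.set(context, seen);
    }
  }
  return counts;
}

// Return the continuation seen most often after `context`, or undefined if never seen.
function predictNext(counts: Counts, context: string): string | undefined {
  const seen = counts.get(context);
  if (!seen) return undefined;
  let best: string | undefined;
  let bestCount = 0;
  for (const [token, count] of Array.from(seen)) {
    if (count > bestCount) {
      best = token;
      bestCount = count;
    }
  }
  return best;
}

const model = train(corpus);
console.log(predictNext(model, "2 + 2 =")); // 4 (seen three times, versus "5" once)
console.log(predictNext(model, "7 + 8 =")); // undefined (no pattern to draw on)
```

The sketch answers "2 + 2 =" correctly only because that pattern dominates its data, and it has nothing to offer for "7 + 8 =", which never appeared.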

Key Point

LLMs operate on the principle of prediction rather than calculation. They generate responses by predicting word sequences based on patterns in their training data, not by performing actual operations.

Inherent Limitations

Due to their nature as prediction engines, LLMs have several inherent limitations:

No Real-Time Data

LLMs cannot access current information like today's weather, stock prices, or news headlines. Their knowledge is limited to what was available in their training data, which has a specific cutoff date.

Example: If asked "What's the temperature in Delhi right now?", a pure LLM cannot provide an accurate answer without external tools.

No Actual Computation

LLMs cannot perform calculations in the traditional sense. They can predict answers to common math problems but struggle with complex or unusual calculations that weren't well-represented in their training data.

Example: While an LLM might get "12 × 12" right based on pattern recognition, it might fail on "19,387 × 6,421" without external help.
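By contrast, a calculator tool computes the result deterministically, whatever the operands. The sketch below is a hypothetical `multiplyExact` helper (not part of any library), assuming integer operands that may include thousands separators.

```typescript
// Hypothetical calculator "tool": computes the product rather than predicting it.
// Accepts operands such as "19,387" by stripping thousands separators first.
function multiplyExact(a: string, b: string): number {
  const x = Number(a.replace(/,/g, ""));
  const y = Number(b.replace(/,/g, ""));
  const product = x * y;
  // Refuse non-integers and results too large to represent exactly as a JS number.
  if (!Number.isSafeInteger(x) || !Number.isSafeInteger(y) || !Number.isSafeInteger(product)) {
    throw new Error("operands must be integers and the product must fit in a safe integer");
  }
  return product;
}

console.log(multiplyExact("12", "12"));        // 144
console.log(multiplyExact("19,387", "6,421")); // 124483927
```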

Cannot Execute Code

While LLMs can generate code, they cannot execute it themselves or observe its results. Any code-related functionality requires external tools to run the code and return results.

Example: An LLM can write a Python function to analyze data but cannot run it or see its output without an external code interpreter.

Training Data Limitations

LLMs are only as good as their training data. If the data contains errors or biases, these will be reflected in the model's predictions. This can lead to incorrect answers for questions where the training data was flawed.

Example: If a word was consistently misspelled in its training data, an LLM may reproduce that misspelling as if it were correct.

Extending Capabilities with External Tools

To overcome their inherent limitations, modern AI systems often integrate LLMs with external tools. These tools allow LLMs to perform tasks that would be impossible for a pure prediction model.

Code Interpreters

Let the LLM write code that the tool then executes to perform calculations, analyze data, or solve complex problems. The code runs in a sandboxed environment, and the results are returned to the model.
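In a code interpreter, the model only produces code as text; the host application is what runs it. Below is a minimal sketch of the host side, assuming Node.js and its built-in `node:vm` module; the generated snippet and the `runGeneratedCode` name are hypothetical. Note that `node:vm` only gives the code a fresh global scope and a timeout; it is not a security boundary, so production interpreters typically isolate untrusted code in a separate process or container.

```typescript
import * as vm from "node:vm";

// Hypothetical code the model returned as plain text. The model wrote it,
// but it cannot run it; the host does that below.
const generatedCode = `
  const values = [3, 1, 4, 1, 5, 9, 2, 6];
  const sum = values.reduce((acc, v) => acc + v, 0);
  sum / values.length;
`;

// Run the snippet in a new context with no access to the host's variables,
// aborting if it runs longer than 1 second. The value of the last expression
// statement is returned.
function runGeneratedCode(code: string): unknown {
  return vm.runInNewContext(code, {}, { timeout: 1000 });
}

const result = runGeneratedCode(generatedCode);
// A real system would now send this result back to the model as new context.
console.log(result); // 3.875
```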

Web Search

Connects LLMs to search engines, allowing them to retrieve current information from the internet and incorporate it into their responses.

Specialized APIs

Provide access to specific data sources or services, such as weather forecasts, financial data, or translation services, to enhance the LLM's capabilities.
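All three kinds of tools follow a similar loop: the model emits a structured request naming a tool and its arguments, the host application runs the tool, and the result is fed back to the model as additional context. The sketch below uses a stubbed model (`fakeModel`) and a made-up message format; real provider APIs define their own tool-calling formats, so treat every name here as hypothetical.

```typescript
// Hypothetical message/tool formats for illustration; real APIs define their own.
type ToolArgs = Record<string, number>;
type ModelTurn = { text: string } | { toolCall: { name: string; args: ToolArgs } };

// Registry of deterministic functions the host is willing to run.
const tools: Record<string, (args: ToolArgs) => string> = {
  multiply: (args) => String(args.a * args.b),
};

// Stub standing in for the LLM: it requests a tool first, then turns the
// tool's result (now part of its context) into a sentence.
function fakeModel(messages: string[]): ModelTurn {
  const last = messages[messages.length - 1] ?? "";
  if (last.startsWith("TOOL_RESULT:")) {
    return { text: `The answer is ${last.slice("TOOL_RESULT:".length)}.` };
  }
  return { toolCall: { name: "multiply", args: { a: 19387, b: 6421 } } };
}

// Host loop: call the model, run any requested tool, feed the result back.
function runWithTools(userMessage: string, maxSteps = 5): string {
  const messages: string[] = [userMessage];
  for (let step = 0; step < maxSteps; step++) {
    const turn = fakeModel(messages);
    if ("text" in turn) return turn.text;
    const tool = tools[turn.toolCall.name];
    if (!tool) throw new Error(`Unknown tool: ${turn.toolCall.name}`);
    messages.push(`TOOL_RESULT:${tool(turn.toolCall.args)}`);
  }
  throw new Error("Too many tool steps without a final answer");
}

console.log(runWithTools("What is 19,387 × 6,421?")); // The answer is 124483927.
```

The key design point is that the model never performs the multiplication; it only decides which tool to call and how to phrase the tool's output.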

From Gemini: On Using External Tools

For simple, straightforward arithmetic, I can often provide the answer as a pure LLM by drawing on the patterns I learned during training.

For more complex or critical calculations, I am designed to take external help by using a computational tool to ensure accuracy. This is a key feature of my architecture that allows me to reliably handle a wide range of mathematical tasks without hallucinating or making errors that a pure language model might.

This hybrid approach allows me to combine the strengths of both systems: the linguistic fluency and contextual understanding of an LLM with the precision and reliability of a dedicated computational engine.

Key Insight

When interacting with modern AI assistants, you're often engaging with a hybrid system that combines an LLM's predictive capabilities with various specialized tools. This integration allows the AI to transcend the limitations of a pure LLM.

The Importance of Context

One critical limitation of LLMs is that they don't inherently maintain context or "remember" previous interactions: each request is processed on its own. Any appearance of memory is engineered by sending the relevant conversation history along with each new request.

Sample Chat API Implementation (Pure LLM)

```typescript
import { GoogleGenAI } from "@google/genai";

// With no apiKey option, the SDK reads the key from the GEMINI_API_KEY environment variable.
const ai = new GoogleGenAI({});

async function main() {
  // Start a chat seeded with earlier turns; the chat helper resends this history with each message.
  const chat = await ai.chats.create({
    model: "gemini-2.5-flash",
    history: [
      { role: "user", parts: [{ text: "Hello" }] },
      { role: "model", parts: [{ text: "Great to meet you. What would you like to know?" }] },
    ],
  });

  const response1 = await chat.sendMessage({ message: "I have 2 dogs in my house." });
  console.log("Chat response 1:", response1.text);

  // Answerable only because the earlier message is included in the history sent with this request.
  const response2 = await chat.sendMessage({ message: "How many paws are in my house?" });
  console.log("Chat response 2:", response2.text);
}

// Top-level await requires running this file as an ES module.
await main();
```

When using an LLM directly through an API like this, with no tools configured, you're accessing the pure prediction capabilities of the model without any external data sources.

Direct API vs Platform Interfaces

There's an important distinction to understand when working with LLMs:

Pure LLM (SDK/API Usage)

- Direct interaction with the language model

- Limited to prediction capabilities only

- Cannot access current data or perform calculations

- Context must be supplied with every request, either by your own code or by an SDK chat helper like the one above

- Used in the code example above

Platform Interface (ChatGPT, Gemini Web)

- Enhanced with external tools and APIs

- Can access current information (weather, date, etc.)

- Can perform calculations via code interpreters

- Automatic context management

- Additional safety measures and monitoring

Example Evidence

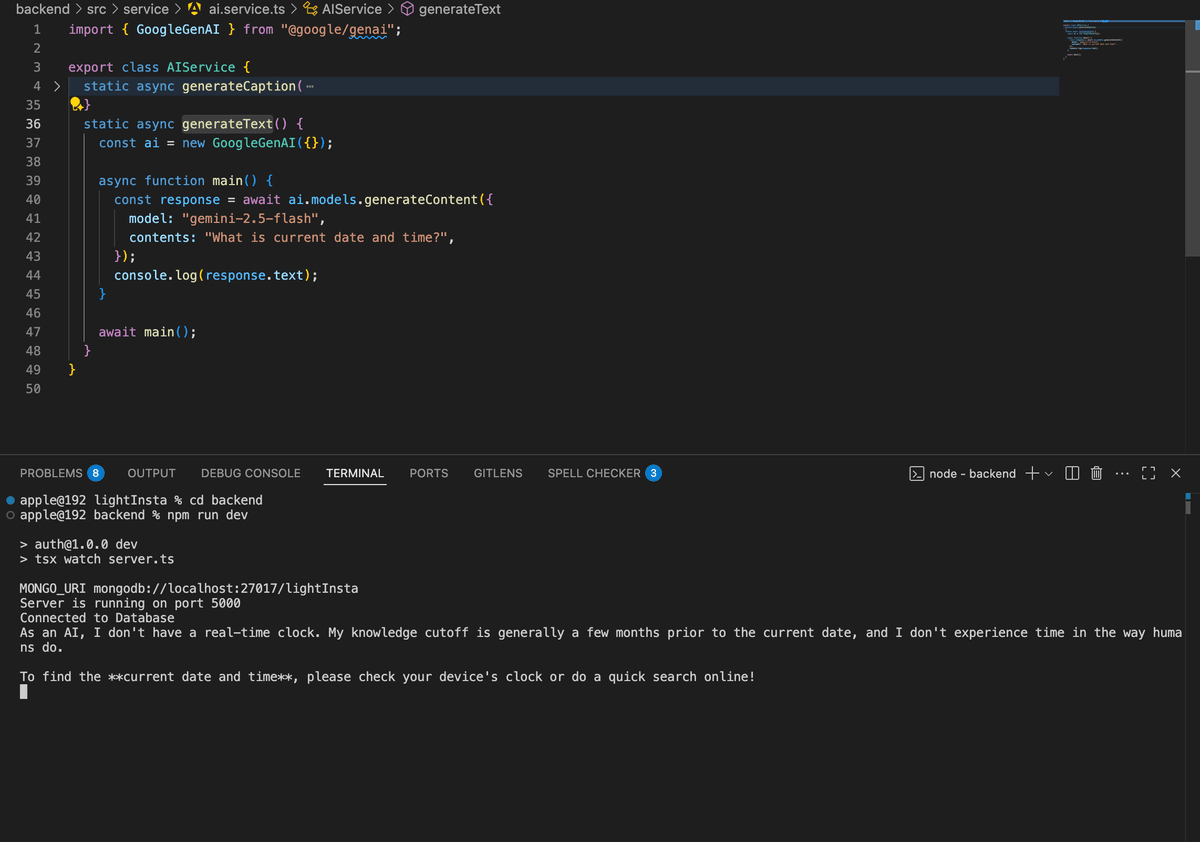

The screenshots demonstrate this difference when asking about the current date:

- In our code example, a pure LLM can't tell you the current date (it would guess based on training data)

- In the Gemini web interface, the platform augments the LLM with the current date information

Code Example: ai.service.ts

This server-side code sends the prompt "What is current date and time?" to the model, but the model itself has no access to the actual current date.

Gemini Web Interface

The Gemini web interface correctly shows "Wednesday, August 6, 2025" because it uses external tools to access the current date.
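One simple way a platform could supply the date is to inject it into the prompt before the model sees it. This is a hypothetical sketch, not Gemini's actual implementation; the `withCurrentDate` helper and its "Current date:" wording are assumptions.

```typescript
// Hypothetical helper: prepend the real date so the model can repeat it
// instead of guessing from its training data.
function withCurrentDate(userPrompt: string, now: Date = new Date()): string {
  const today = now.toLocaleDateString("en-US", {
    weekday: "long",
    year: "numeric",
    month: "long",
    day: "numeric",
  });
  return `Current date: ${today}\n\n${userPrompt}`;
}

// Fixed date (months are zero-based, so 7 = August) to make the output reproducible.
console.log(withCurrentDate("What is the current date?", new Date(2025, 7, 6)));
// Current date: Wednesday, August 6, 2025
//
// What is the current date?
```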


Without Context

User

How many paws are in my house?

LLM

I don't have information about your house or its contents. To answer this question, I would need to know how many pets or animals you have in your house, as different animals have different numbers of paws.

Without previous context about the dogs, the model cannot answer the question accurately.

With Context

User (First Message)

I have 2 dogs in my house.

LLM

That's nice! Dogs make wonderful companions. Is there anything specific about your dogs you'd like to share or discuss?

User (Second Message)

How many paws are in my house?

LLM

Since you mentioned having 2 dogs in your house, and each dog has 4 paws, there would be a total of 8 dog paws in your house (2 dogs × 4 paws each = 8 paws).

With the context that the user has 2 dogs, the model can now provide an accurate answer.

When building applications with LLMs, it's crucial to maintain conversation history. Chat platforms like ChatGPT do this automatically, but if you're building a custom application using an LLM API, you need to explicitly manage the context by sending previous messages along with new ones.
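Here is what "explicitly manage the context" can look like in practice. The model call is a stub (`statelessModel`) that sees only the messages passed to it, and `ChatSession` is a hypothetical client-side class that stores the history and resends all of it on every turn, which is roughly what SDK chat helpers do for you.

```typescript
type ChatMessage = { role: "user" | "model"; text: string };

// Stub standing in for a stateless LLM call: it sees ONLY the messages it is given.
function statelessModel(history: ChatMessage[]): string {
  const last = history[history.length - 1]?.text ?? "";
  if (!last.includes("paws")) return "Noted.";
  const knowsAboutDogs = history.some((m) => m.role === "user" && m.text.includes("2 dogs"));
  return knowsAboutDogs ? "8 paws (2 dogs × 4 paws each)." : "I don't know what animals you have.";
}

// Hypothetical client-side session: the application keeps the history and
// resends all of it with every new message.
class ChatSession {
  private history: ChatMessage[] = [];

  send(text: string): string {
    this.history.push({ role: "user", text });
    const reply = statelessModel(this.history);
    this.history.push({ role: "model", text: reply });
    return reply;
  }
}

// Without context: only the question is sent.
console.log(statelessModel([{ role: "user", text: "How many paws are in my house?" }]));
// I don't know what animals you have.

// With context: the session resends the earlier message about the dogs.
const session = new ChatSession();
session.send("I have 2 dogs in my house.");
console.log(session.send("How many paws are in my house?"));
// 8 paws (2 dogs × 4 paws each).
```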

Key Takeaways

What LLMs Can Do

- Predict text based on patterns in training data

- Generate human-like responses to various prompts

- Apply patterns recognized from training to new inputs

- Maintain context when properly engineered

- Generate creative content like stories or code

What LLMs Cannot Do (Without External Tools)

- Access real-time or current information

- Perform actual calculations (vs. predicting answers)

- Execute code or interact with external systems

- Access information beyond their training data

- Inherently remember previous conversations

Understanding the Hybrid Approach

Modern AI systems combine the predictive power of LLMs with specialized tools to create versatile, practical applications. This hybrid approach leverages the strengths of both systems: the linguistic fluency and contextual understanding of an LLM with the precision and capabilities of dedicated computational tools.

By understanding these fundamental limitations and capabilities, you can better interact with AI systems and build more effective applications that leverage LLMs appropriately.